
Data Fabric: A Complete Guide | Architecture, Benefits & Implementation

What is a Data Fabric?

Data fabric is an architecture that integrates different data systems and tools. It provides unified access to data stored across various locations so that it can be organized, managed, and governed without moving it to a central database or data warehouse or changing its format.

Data fabric relies on metadata to ‘understand’ the data’s structure, lineage, and meaning across various sources. This information enables informed decision-making and optimized data usage. It caters to various applications, including customer insights, regulatory adherence, cloud transitions, data sharing, and analysis.

The Importance of Data Fabric

Data fabric emerged as a response to the growing challenges of managing data in the modern enterprise. Over the past few decades, organizations have witnessed exponential growth in data volume. This data originates from diverse sources, including traditional databases, customer interactions, social media, and Internet of Things (IoT) devices.

As data sources multiplied, they often became siloed within specific departments or applications. Data gravity, the tendency of data to become difficult and expensive to move as it grows in size, was also a significant barrier to consuming data for analytics. The fragmented data landscape made it difficult to obtain a unified view of the organization’s information assets.

These factors created a need for a solution that bridges the gaps between disparate data sources, simplifies access, and ensures consistent governance. Data fabric emerged as an architectural framework that addresses these challenges. It helps businesses use data effectively regardless of where it’s stored: in the cloud, across multiple clouds, in a hybrid environment, on-premises, or at the edge. It makes data sharing and insight gathering easier by offering a complete 360-degree overview of available data.
The key to data fabric is metadata, which, along with machine learning and artificial intelligence (AI), deep data governance, and knowledge management, enables efficient data handling for better business outcomes.

The Benefits of Leveraging Data Fabric

Data fabric offers businesses many benefits by optimizing self-service data exploration and analytics. It promotes speed and efficiency, which leads to lower costs and higher productivity. Its main benefits include:

Solving the issue of data silos by providing accurate and complete insights from different sources, regardless of location.
Speeding up the delivery of business value by making data easily accessible.
Ensuring data is trustworthy, secure, and well-managed through automatic governance and knowledge processes.

Data fabric empowers users to easily find, understand, and utilize data by providing a unified platform that integrates various data processing techniques and tools, such as batch or real-time processing and ETL/ELT.

Data Fabric Architecture

The data fabric architecture, with a foundation in metadata and real-time events and an emphasis on easy access to secure and well-managed data, enables automated integration and governance of dispersed data. Building such an architecture goes beyond setting up a basic app or using certain technologies. It demands teamwork, alignment with business goals, and strategic planning.

Data fabric effectively manages metadata, allowing for scalability and automation. This makes the architecture capable of meeting expanding business needs and ready to incorporate new tools and technologies in the future. The architecture can be summarized as four layers, each encompassing various components.

1. Core Layer

This layer establishes a metadata management system, essentially a detailed catalog of all the data assets. The catalog provides information about the data’s origin, format, meaning, and usage guidelines. The fabric enforces a set of data governance policies.
These policies ensure data quality, consistency, and security across the ecosystem. They define who can access specific data and how it can be used, and they establish processes for data lineage (tracking the data’s journey).

2. Integration Layer

Using the integration layer, data fabric enables users to access and utilize data seamlessly from various sources, both internal and external. This includes data lakes, databases, cloud storage platforms, social media feeds, and even sensor data from the Internet of Things (IoT).

This layer utilizes data transformation tools to clean, standardize, and enrich the ingested data. This involves removing inconsistencies, converting formats (e.g., changing from CSV to a database format), or extracting specific features from the data. It also provides a set of APIs (Application Programming Interfaces), allowing applications and users to access and interact with data from various sources through a consistent interface.

3. Delivery Layer

The data fabric architecture features a central data catalog that acts as a searchable repository of all available data assets. It provides detailed descriptions and access controls and facilitates easy discovery of the data users need.

Data fabric enforces secure data access control mechanisms. It determines who can access specific data sets and how they can be used, ensuring data privacy and compliance with regulations. Finally, it delivers the prepared data to various applications and users in the required format. This might involve data visualization tools, machine learning algorithms, or business intelligence dashboards.

4. Management and Monitoring Layer

Data fabric facilitates quality monitoring throughout the lifecycle by integrating with data quality tools. This monitoring includes identifying and rectifying errors, inconsistencies, or missing values.
The architecture leverages performance monitoring tools within the data ecosystem to track processing speeds, identify bottlenecks, and ensure smooth data flow across the system. It prioritizes data security by implementing security measures like encryption, access control, and audit trails.

Data Mesh vs. Data Fabric vs. Data Lake: What’s the Difference?

Data mesh, data fabric, and data lake are three prominent approaches to managing vast amounts of data spread across diverse sources. They all have distinct roles and functions in data management.

Definition
Data lake: A central repository where organizations can dump raw data from various sources, like databases, social media feeds, and sensor readings.
Data mesh: A network of self-serving data sources. Each domain within an organization (e.g., marketing, finance) owns and manages its data as a product.
Data fabric: A layer that simplifies data access and management across diverse sources, regardless of location or format.

Function
Data lake: A central, low-cost storage solution for vast amounts of data.
Data mesh: Domain teams are responsible for ensuring data quality and for cleaning and transforming data for use by their own domain and potentially others.
Data fabric: Provides a unified view of the data, allowing users to find and utilize information from various sources through a single interface.

Focus
Data lake: Offers flexibility for storing any data, even if it’s unstructured or not immediately usable.
Data mesh: Emphasizes clear data ownership and empowers domain teams to manage their data as a valuable asset.
Data fabric: Focuses on integration and governance by enforcing policies and ensuring data quality, security, and accessibility.

Data Ownership
Data lake: Ownership of data in a lake can be unclear.
Data mesh: Each domain (department) owns its data and is responsible for its quality, accuracy, and transformation.
Data fabric: The data fabric itself doesn’t own the data; it provides the platform for access and governance. Ownership remains with the source.

Data Access
Data lake: Finding specific data in a lake requires technical expertise to navigate and access the data.
Data mesh: Data access is typically limited to the domain that owns it, ensuring focused utilization.
Data fabric: Offers a unified view and easy access to data from various sources through a central platform. Users can find and utilize data regardless of its original location.

Data Fabric Use Cases

1. Data Integration

Data fabric helps break down data silos, especially in the finance sector, where it can merge data from various financial systems. It allows data engineers to build effective data pipelines, improving data access. As a result, finance organizations can get a complete picture of their financial and enterprise data, leading to more informed decision-making.

2. Real-time Data Analytics

Data fabric aids organizations in accessing, integrating, and analyzing data in near real time. In healthcare, it allows for the analysis of patient data to improve care, treatments, and outcomes.

3. Data Discovery

Data discovery is an essential part of business analytics, as it helps control access to the right data. It reveals available data, like the “load” step in traditional ETL (Extract, Transform, Load) processes. The power of the data fabric framework comes from its data management layer. This layer spans all the other layers and covers security, data governance, and master data management (MDM), ensuring efficient and secure data handling.

4. Data Governance

With data fabric architecture, organizations can put strong data governance policies in place. This helps them control their data better, ensuring it is accurate, consistent, and secure. For instance, government bodies can use data fabric to help safeguard sensitive information, like personal details. Improving data accuracy and consistency through data fabric raises data quality, which leads to more reliable data analyses.
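To make the catalog idea from the Core and Delivery layers more concrete, here is a minimal sketch in Python. It assumes a purely in-memory catalog; the class names (CatalogEntry, DataCatalog), the asset names, and the location strings are all hypothetical and do not come from any particular data fabric product. The point it illustrates is that the catalog stores metadata only, while the data stays at its source.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """Metadata describing one data asset; the data itself stays at the source."""
    name: str
    location: str      # where the data lives (illustrative URI-style string)
    data_format: str   # e.g. "table", "parquet", "json"
    owner: str         # ownership remains with the source team
    description: str = ""
    tags: set[str] = field(default_factory=set)


class DataCatalog:
    """Hypothetical in-memory catalog: register assets, then search by keyword."""

    def __init__(self) -> None:
        self._entries: dict[str, CatalogEntry] = {}

    def register(self, entry: CatalogEntry) -> None:
        self._entries[entry.name] = entry

    def search(self, keyword: str) -> list[str]:
        """Return sorted names of assets whose name, description, or tags match."""
        kw = keyword.lower()
        return sorted(
            e.name
            for e in self._entries.values()
            if kw in e.name.lower()
            or kw in e.description.lower()
            or any(kw in t.lower() for t in e.tags)
        )


catalog = DataCatalog()
catalog.register(CatalogEntry("ledger", "onprem://finance/ledger", "table", "finance",
                              "General ledger postings", {"finance", "accounting"}))
catalog.register(CatalogEntry("clickstream", "s3://web/clicks", "parquet", "marketing",
                              "Website click events", {"web", "customer"}))
catalog.register(CatalogEntry("invoices", "cloud://erp/invoices", "json", "finance",
                              "Customer invoices", {"finance", "customer"}))

finance_assets = catalog.search("finance")
customer_assets = catalog.search("customer")
```

A real catalog would also track lineage and usage guidelines and would persist its metadata; the sketch only shows how users can discover assets across locations through one interface.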
How to Implement Data Fabric

Data fabric offers a transformative approach to data management, but successful implementation requires careful planning and execution.

1. Data Landscape

Conduct a comprehensive inventory of all the data sources, both internal and external. Evaluate the current state of the data and understand how different user groups within the organization access and utilize data. This understanding helps tailor the data fabric to their specific needs and workflows.

2. Data Fabric Strategy

Clearly define the objectives to achieve with data fabric implementation. Is it about improving data accessibility, enhancing data security, or streamlining data governance processes? To select a data fabric architecture, consider your organization’s size, data volume, budget, and technical expertise.

3. Data Fabric Platform

Choose data fabric tools and technologies that align with the chosen architecture and strategy. Integrate data quality and governance practices throughout the implementation process so that the data fabric is accurate, consistent, and secure from the start.

4. Manage Your Data

Connect various data sources into a unified platform. Implement data transformation tools and establish a centralized data catalog to document and organize data assets.

5. Govern the Data Fabric

To protect sensitive data, prioritize data security by leveraging data encryption, access controls (such as role-based access control, or RBAC), and audit trails. Establish clear data governance policies that dictate your data fabric’s ownership, access control, and usage guidelines.

6. User Training

Design training programs to educate users on accessing and utilizing data within the data fabric platform. Help teams understand the importance of data quality, responsible data usage, and data security best practices.
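The role-based access control and audit trail mentioned in step 5 can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a production design: the role names, dataset names, and the is_allowed helper are hypothetical, and a real deployment would typically delegate these checks to the platform’s own policy engine.

```python
# Hypothetical role-to-permission mapping; roles and dataset names are illustrative.
ROLE_PERMISSIONS = {
    "analyst": {("sales_summary", "read")},
    "data_engineer": {
        ("sales_summary", "read"),
        ("sales_summary", "write"),
        ("customer_pii", "read"),
    },
}

# Every access decision is recorded as (role, dataset, action, allowed).
audit_log: list[tuple[str, str, str, bool]] = []


def is_allowed(role: str, dataset: str, action: str) -> bool:
    """Least privilege: deny unless the role was explicitly granted the action."""
    allowed = (dataset, action) in ROLE_PERMISSIONS.get(role, set())
    audit_log.append((role, dataset, action, allowed))  # audit trail entry
    return allowed
```

For example, is_allowed("analyst", "customer_pii", "read") is denied because the analyst role was never granted that permission, and the denial itself is written to the audit log.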
Risks Associated with Data Fabric

While data fabric has multiple advantages for data management, it also introduces new security considerations.

Data in Motion

During data movement within the data fabric, sensitive information is vulnerable to interception by unauthorized parties. To secure the data throughout its lifecycle, organizations can:

Encrypt data at rest (stored) and in transit (being moved) to safeguard its confidentiality even if it is intercepted.
Utilize secure communication protocols like HTTPS to establish encrypted connections during data transfer.

Access Control Challenges

If data fabric is not managed effectively, it can create a single point of failure, where a security breach could grant unauthorized access to a vast amount of data. To reduce this risk:

Grant users only the minimum level of access needed to perform their tasks.
Define user roles with specific permissions, restricting access to sensitive data based on job function.

Evolving Cyber Threats

Data fabric systems must adapt and respond to evolving cyber threats. To keep up:

Conduct regular testing and assessments to identify and address potential security weaknesses.
Implement a SIEM (Security Information and Event Management) system to monitor security events, detect suspicious activity, and enable a response to potential breaches.

Better Data Management with LIKE.TG

Data fabric is a data management architecture built for flexibility, scalability, and automation. It is a unified platform to access, integrate, and govern data from diverse sources. While it offers a powerful approach, its success hinges on efficient data integration and transformation.

LIKE.TG provides pre-built connectors, data quality management, data governance, and workflow automation to simplify data preparation and ensure high-quality data flows within your data fabric. It seamlessly connects multiple data sources, regardless of format or location, allowing you to remove data silos and gain a complete view of your data.
Utilizing metadata, LIKE.TG delivers automation for all your data management needs, including integration, data preparation, data quality, governance, and master data management.

Experience LIKE.TG Data Stack with a 14-day free trial or schedule a demo today.

What are Database APIs? Why and How are they Used?

Modern applications store a lot of data, yet databases continue to be the primary source of the data that these applications need to function. This is where database APIs come in, making it easier for applications and services to retrieve and manipulate data.

A database API’s biggest advantage is that it eliminates database operations’ dependence on proprietary methods and provides a unified interface, streamlining data operations. Here’s everything you need to know about database APIs:

What is a Database API?

A database API comprises tools and protocols that allow applications to interact with a database management system (DBMS). While APIs in general act as intermediaries between applications or software components, database APIs specifically liaise between applications and DBMSs. Database APIs are mainly used for extracting data, and they help perform CRUD (Create, Read, Update, Delete) operations or send queries.

Why are Database APIs Used?

There are five primary reasons for using database APIs:

1. Security

Database APIs feature built-in security features with varying granularities, such as authentication, encryption, and access control. These features improve your database’s security and prevent unauthorized access, data breaches, and abuse.

2. Interoperability

Database APIs provide various applications and systems with a standardized means of interacting with a database. This interoperability is necessary when multiple applications require access to the same data.

3. Efficiency

Manual querying and data retrieval from a database requires knowledge of SQL, the database schema, and query construction. In comparison, database APIs feature predefined endpoints for querying and data retrieval and use abstraction to greatly reduce these processes’ complexity. Features such as data caching help lower latency for API calls.

4. Abstraction

Database APIs’ abstraction enables developers to work with a database without having to understand the minutiae of its functions.
This way, developers benefit from a simpler API development process. They can focus on the application they’re developing instead of database management.

5. Consistency

Database APIs provide uniform methods for accessing and manipulating data, which ensures consistency in an application’s interactions with the database.

Types of Database APIs and Their Examples

Database APIs are categorized based on the approach, framework, or standard they use to interact with a database. Three prominent categories are:

1. Direct Database APIs

Direct database APIs communicate directly with a database, typically using SQL. Examples include:

Microsoft Open Database Connectivity (ODBC): ODBC is specially designed for relational data stores and is written in the C programming language. It’s language-agnostic (it allows application-database communication regardless of language) and DBMS-independent (it lets applications access data from different DBMSs).
Java Database Connectivity (JDBC): JDBC is database-independent but designed specifically for applications that use the Java programming language.
Microsoft Object Linking and Embedding Database (OLE-DB): OLE-DB allows uniform access to data from a variety of sources. It uses a set of interfaces implemented using the Component Object Model and supports non-relational databases.

2. Object-Relational Mapping (ORM) APIs

ORM APIs apply object-based abstraction to database interactions. Examples include:

Hibernate: Hibernate is an ORM framework for Java that maps Java classes to database tables, enabling CRUD operations without hand-written SQL queries.
Entity Framework: Entity Framework uses .NET objects to create data access layers for various on-prem and cloud databases.
Django ORM: Django ORM is part of the Python-based open-source Django web framework and lets developers interact with databases using Python code.

3. RESTful and GraphQL APIs

APIs in this category use web protocols (usually HTTP) for database interactions and often abstract the database layer to create a more flexible interface.

RESTful APIs: REST APIs use standard HTTP methods for CRUD operations.
GraphQL APIs: These APIs are organized using entities and fields instead of endpoints. Unlike RESTful APIs, GraphQL APIs can fetch an application’s required data in a single request for increased efficiency.

Key Features of Database APIs

1. Connection Management

Database APIs can manage the creation and configuration of connections to a database. Some database APIs have a ‘connection pooling’ feature that saves resources and improves performance by creating and reusing a pool of connections instead of setting up a new connection for every request.

Database APIs also handle connection cleanup, which involves safely closing connections after usage to minimize resource wastage and application issues. Connection cleanup boosts efficiency and delivers a smoother user experience.

2. Query Execution

Database APIs simplify SQL query and command execution. Applications can send raw SQL queries directly to the database, and database APIs offer specific methods for executing them. Here are a few methods for executing common commands:

Execute for DML: For executing INSERT, UPDATE, and DELETE statements.
Execute for DDL: For executing schema modification commands like CREATE TABLE.
Execute for SELECT: Combined with ‘fetchall’ or ‘fetchone’ for retrieving results.

3. Data Mapping

The ORM capabilities in many database APIs map database tables to application objects. Developers can leverage this abstraction and use high-level programming constructs instead of raw SQL to interact with the database.

4. Performance Optimization

Caching stores frequently accessed data in memory. This keeps an API from having to repeatedly access a database to fetch the same data. It also leads to faster responses and lowers database load.

5. Schema Management

Database APIs can sometimes feature built-in tools for managing schema changes. These tools can simplify the process of modifying the database schema as application requirements evolve. Many database APIs also offer support for migrations (versioned changes to the database schema). Using migrations, you can keep database changes consistent across environments. Keeping these changes consistent reduces data duplication and redundancy and improves data accuracy and quality.

Vendor-Provided vs. Custom-Built Database APIs

Vendor-provided database APIs are developed by the database vendors themselves. Their expertise and knowledge ensure these APIs work seamlessly with their respective database systems, similar to how software suites are designed to work together. Besides compatibility, these database APIs also enjoy official support from the vendor (including updates, troubleshooting, and technical support) and are optimized for performance and efficiency.

Examples of Vendor-Provided Database APIs: ADO.NET by Microsoft, Oracle Call Interface (OCI) by Oracle, and the official drivers provided by MongoDB.

In contrast, custom-built database APIs are developed in-house, usually to cater to specific organizational requirements or applications. This approach is very flexible in design and functionality. Because these database APIs are built from scratch, business owners can collaborate with developers on customization. They can include features that vendor-provided APIs don’t have and target specific security, organizational, or operational requirements.

The organization is responsible for updating and maintaining these database APIs, which can require dedicated personnel and significant resources. However, custom-built database APIs can readily integrate with existing organizational systems and processes. This minimizes disruptions and downtime since the APIs are built with the current systems in mind.
Examples of Custom-Built Database APIs: Custom RESTful (Representational State Transfer) APIs, custom ORM layers, and open-source database libraries such as SQLAlchemy.

The Benefits of Using (and Building) Database APIs

Improved Development and Productivity

APIs give developers the freedom to work with the framework, language, or tech stack of their choosing. Since developers don’t have to deal with the minutiae of database interactions, they can focus on writing and refining application logic. This increases their productivity and leads to quicker turnarounds.

Enhanced Scalability

Scalable database APIs directly impact the scalability of their respective applications. They help a database keep up with growing demands by efficiently managing connections, preventing bottlenecks, and maintaining reliability by implementing principles such as:

API Rate Limiting: Rate limiting restricts the number of user requests within a particular timeframe. Doing this prevents potential overloads and keeps the API stable even during periods of heavy activity.
Loose Coupling: The loose coupling principle minimizes the number of dependencies between API components. As a result, certain parts can undergo scaling or modification without any major impact on others.
Efficient Database Usage: Proper indexing and query optimization can help optimize API-database interactions for consistent performance. These practices can maintain operational efficiency even as an application is scaled.

Reusability

Once you have a well-designed API, you can reuse its functionality across multiple applications to save resources and time. For example, consider an internal database API that connects an organization’s central employee database with its Employee Management System (EMS).
This API can be used for employee onboarding, with tasks such as creating or updating employee records. The same API can find secondary and even tertiary uses in payroll processing (retrieving and updating salary information, generating pay slips) or perks and benefits management (updating eligibility and usage, processing requests).

What are The Challenges and Limitations of Using (and Building) Database APIs?

Some of the trickier aspects of database API usage include:

Greater Complexity

In organizations that opt for bespoke database APIs, business owners and relevant teams must work closely with developers and have in-depth knowledge of the database and application demands. This increased complexity can prolong development and drive up costs.

Frequent Maintenance

Database APIs need frequent maintenance to keep them up and running. Besides ensuring smooth operations, these maintenance efforts also serve to debug the database, incorporate new features, and keep the API compatible with system updates. While such maintenance is necessary, it can also take up considerable time and effort.

Compatibility Challenges

Compatibility issues are less likely to arise in custom-built database APIs since they’re made with the existing systems and infrastructure in mind. However, vendor-built database APIs can encounter glitches, and applications that work with multiple database systems are more vulnerable to them. These compatibility problems can occur due to database-specific features or varying data types.

Best Practices for Building and Using Database APIs

1. Intuitive Design

The simpler the API design, the easier it is to use. Which features are necessary and which ones are extraneous varies from one API to another, but too many of the latter can crowd the interface and hamper the user experience.

2. Prioritizing Security

Building comprehensive authorization and authentication protocols into the API is a great approach to enhance security.
You should also encrypt data both in transit and at rest. Lastly, set up periodic audits to proactively find and address security risks in the API.

3. Maintaining Documentation

Create and maintain thorough API documentation, covering API methods in detail, discussing error handling procedures, and providing usage examples. Comprehensive, well-structured documentation is an invaluable resource that helps developers understand APIs and use them correctly.

4. Extensive Testing

Test the API thoroughly to verify its functionality and efficiency under different operational conditions. Extensive pre-deployment testing can reveal potential issues for you to address. Different types of tests target different areas.

5. Versioning

Implementing versioning makes it easier to manage any changes to the database API. Versioning also contributes to backward compatibility: developers can work on newer versions of an API while still using older versions to keep current applications intact.

Using No-Code Tools to Build Database APIs

No-code tools are an accessible, speedier alternative to conventional, code-driven methods for building database APIs. Such tools offer a visual, drag-and-drop interface with pre-built templates for common use cases. They also feature easy integrations with different tools, services, and other APIs for increased functionality. You can use no-code tools to automatically generate RESTful API endpoints or create custom endpoints without coding.

How LIKE.TG Helps Build Database APIs

When using database APIs, you don’t need to manually query a database to access or retrieve pertinent information. They offer an easier, faster way of working with databases compared to manual methods, which makes them useful for any business wanting to integrate data into its processes.

LIKE.TG’s no-code API builder and designer, LIKE.TG API Management, lets your organization create custom database APIs that you can reuse and repurpose as needed.
You can also auto-generate CRUD API endpoints. The tool is fast and intuitive, with a drag-and-drop interface and built-in connectors that simplify every aspect of a database API’s lifecycle, even for non-technical users.

Start benefiting from API-driven connectivity today: schedule a demo or speak to our team for more information.
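As a concrete illustration of the query execution methods and connection cleanup described under Key Features, here is a minimal sketch using Python’s built-in sqlite3 module, which follows the Python DB-API. The in-memory database, the employees table, and the sample rows are assumptions made for the example; other database APIs expose similar but not identical calls.

```python
import sqlite3
from contextlib import closing

# An in-memory database keeps the sketch self-contained;
# the table, columns, and rows are illustrative only.
with closing(sqlite3.connect(":memory:")) as conn:
    # Execute for DDL: create the schema.
    conn.execute(
        "CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL)"
    )

    # Execute for DML: parameterized INSERT and UPDATE statements.
    conn.executemany(
        "INSERT INTO employees (name, salary) VALUES (?, ?)",
        [("Ada", 5200.0), ("Grace", 6100.0)],
    )
    conn.execute(
        "UPDATE employees SET salary = salary + 300 WHERE name = ?", ("Ada",)
    )
    conn.commit()

    # Execute for SELECT, combined with fetchone / fetchall.
    ada = conn.execute(
        "SELECT name, salary FROM employees WHERE name = ?", ("Ada",)
    ).fetchone()
    everyone = conn.execute(
        "SELECT name FROM employees ORDER BY name"
    ).fetchall()
# closing() performs connection cleanup even if a statement raises.
```

Passing values as parameters (the ? placeholders) rather than formatting them into the SQL string is the usual way to avoid SQL injection with this module.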

EDI VAN: Everything You Need to Know about Value-Added Networks (VAN) in EDI

The global EDI market, valued at USD 36.52 billion in 2023, has a projected compound annual growth rate (CAGR) of 12% from 2024 to 2032. As organizations worldwide increasingly turn to EDI to enhance their business processes, the role of VANs in facilitating seamless data interchange has garnered significant attention. This blog provides a comprehensive overview of EDI VANs, detailing their functionalities, benefits, and important aspects to consider when selecting the right EDI communication method.

What Is an EDI VAN?

EDI VANs are third-party service providers that manage electronic document exchanges between trading partners. VANs act as intermediaries that receive, store, and transmit EDI messages securely and efficiently. VANs add value by offering various services beyond basic data transmission, such as message tracking, error detection, and data translation. They help businesses manage their EDI communications and handle the technical challenges associated with direct EDI connections.

What are the Benefits of Using an EDI VAN?

Security and Compliance: VANs provide secure data transmission, protecting sensitive information during transit. They also comply with industry standards and regulations, such as HIPAA for healthcare and GDPR for data protection, which helps businesses meet compliance requirements.
Data Translation and Integration: VANs offer data translation services, converting documents from one format to another to ensure compatibility between different EDI systems. This enables seamless integration with various trading partners, regardless of their EDI standards or software.
Simplified Partner Onboarding: VANs streamline onboarding new trading partners by handling the technical aspects of EDI integration. This reduces the time and effort required to establish EDI connections, allowing businesses to expand their partner networks more quickly.
Error Detection and Resolution: VANs provide error detection and resolution services, identifying issues in EDI messages and alerting businesses to take corrective action. This reduces the risk of failed transactions and ensures that documents are processed accurately.
Tracking and Reporting: VANs offer comprehensive tracking and reporting tools, allowing businesses to monitor the status of their EDI messages in real time. This visibility helps companies manage their EDI operations more effectively and make informed decisions.

Types of VANs in EDI

VANs come in various forms, each offering different services and capabilities. The four most common types of EDI VANs are:

Public VANs

Public VANs are shared networks used by multiple businesses. Due to their shared infrastructure, they offer standard EDI services, such as data transmission, translation, and tracking, at a lower cost. Public VANs are suitable for small to medium-sized businesses with moderate EDI needs.

Private VANs

Private VANs are dedicated networks used by a single organization or a group of related companies. They provide customized EDI services tailored to the organization’s specific needs and offer higher levels of security, reliability, and control. Private VANs are ideal for large enterprises with complex EDI requirements.

Industry-Specific VANs

Industry-specific VANs cater to the unique needs of industries such as healthcare, retail, and automotive. They offer specialized EDI services and compliance features relevant to the industry, ensuring that businesses can meet regulatory requirements and industry standards.

Cloud-Based VANs

Cloud-based VANs use cloud computing technology to provide scalable and flexible EDI services. They offer on-demand access to EDI capabilities, allowing businesses to adjust usage based on their needs. Cloud-based VANs are easy to implement, making them suitable for businesses of all sizes.

How Do VANs Work? A Step-by-Step Guide

Document Preparation

The sender prepares an EDI document, such as a purchase order or invoice, using their EDI software. For example, a company might create a purchase order for 100 units of a specific product, formatted according to the 850 transaction set of the X12 standard, making it ready for transmission.

Transmission to VAN

The sender’s EDI system transmits the purchase order to the VAN. This transmission can be done via various communication protocols, such as FTP, AS2, or a dedicated EDI connection. The VAN receives the document and validates its format and content to ensure it meets the 850 transaction set of the X12 standard.

Data Translation

If necessary, the VAN translates the purchase order from the sender’s EDI 850 format to the recipient’s format. This ensures that the purchase order is compatible with the recipient’s EDI system and can be processed correctly.

Message Routing

The VAN determines the appropriate routing for the purchase order based on the recipient’s information. Then it forwards the document to the recipient’s EDI system via the specified communication protocol.

Acknowledgment and Tracking

Upon successful delivery, the VAN generates an acknowledgment message (999 transaction set) confirming receipt of the purchase order by the recipient. The message also indicates whether the file has any errors. The sender can track their purchase order status through the VAN’s reporting tools, ensuring transparency and accountability.

Error Handling

If any issues are detected during transmission or processing, the VAN notifies the sender and provides details about the error.

Limitations of VANs

While EDI VANs offer numerous benefits, they also have certain limitations that businesses should consider:

Cost: VANs can be expensive, especially for small businesses with limited EDI requirements. The costs associated with using a VAN include setup, subscription, and transaction fees, which can add up over time.
Dependency on Third Parties: Relying on a third-party VAN means businesses have less control over their EDI operations. Any issues or downtime experienced by the VAN can impact the business’s ability to send and receive EDI messages. Complexity: Using a VAN adds a layer of complexity to EDI communications. Businesses must manage their interactions with the VAN and ensure that their EDI documents are properly formatted and transmitted. Limited Flexibility: VANs may offer less flexibility and customization than EDI integration solutions. Businesses with unique or highly specific EDI requirements may struggle to configure the VAN to meet their needs. Limited Strategic Visibility: EDI VANs often lack the transparency that other systems provide. Tracking audit trails or investigating data issues can be difficult, especially with interconnected VAN networks. This lack of visibility creates a “black box” effect, leaving customers uncertain about their EDI transactions. They must rely on the provider to handle connections, updates, and communications, particularly during maintenance or service disruptions. Alternatives to a VAN for EDI While VANs have been a popular choice for EDI communication for many years, they may not be the optimal solution for every organization. Factors such as budget, scalability, and control over infrastructure need careful consideration. Businesses seeking alternatives to VANs for their EDI communications have several options to consider, such as: EDI via AS2 AS2 (Applicability Statement 2) is an Internet communications protocol that enables data to be transmitted securely over the Internet. By using AS2, businesses can establish direct connections with their trading partners, reducing the dependency on third-party providers.
AS2 offers strong security features, such as encryption and digital signatures, making it a suitable option for businesses with stringent security requirements, such as businesses operating in the retail and manufacturing industries. However, AS2 requires technical expertise and resources to implement and manage. EDI via FTP/VPN, SFTP, FTPS FTP, SFTP, and FTPS are commonly used communication protocols for the exchange of EDI documents via the Internet. These protocols can be used to connect to business partners directly (Direct EDI) or via an EDI Network Services Provider. Each option secures data transmission differently (plain FTP is unencrypted and is therefore typically tunneled through a VPN, while SFTP and FTPS encrypt the transfer itself), catering to different security and infrastructure needs. Web EDI Web EDI is an approach to EDI that leverages Internet technologies to facilitate the electronic exchange of business documents. This methodology eliminates the need for costly VAN services by using the web for communication. It is more suited for companies that only need to use EDI occasionally. EDI Integration Platforms EDI integration platforms offer cloud-based EDI services with advanced features such as data translation, automation, and integration with ERP systems. These platforms provide a modern and flexible alternative to traditional VANs, supporting various EDI standards and protocols. They often include built-in compliance tools to ensure adherence to industry-specific regulations and standards. These platforms offer scalability, allowing businesses to expand their EDI capabilities as they grow without significant upfront investment. EDI VAN vs. Modern EDI Tools Modern EDI integration tools offer several advantages over traditional VANs, making them a compelling choice for businesses seeking to enhance their EDI capabilities. The following table compares EDI VANs and modern EDI tools:

| Feature/Aspect | EDI VAN | Modern EDI Tools |
| --- | --- | --- |
| Cost | Typically higher due to subscription fees and per-transaction charges. | Generally lower; pay-as-you-go or subscription-based. |
| Setup and Maintenance | Complex setup, often requiring specialized IT knowledge. | Easier setup with user-friendly interfaces and automated updates. |
| Scalability | Limited; scaling can be expensive and time-consuming. | Highly scalable, especially with cloud-based solutions. |
| Flexibility | Less flexible, often rigid in terms of integration and customization. | Highly flexible with API integrations and customization options. |
| Speed of Implementation | Slower, due to complex setup and configuration. | Faster, with quick deployment and easy integration. |
| Reliability | High reliability with guaranteed delivery and tracking. | High reliability, but depends on the provider and internet connectivity. |
| Compliance | Generally compliant with industry standards (e.g., HIPAA, EDIFACT). | Also compliant, with frequent updates to meet new standards. |
| Data Management | Basic data management capabilities. | Advanced data analytics and reporting features. |
| Partner Connectivity | Often requires separate connections for each partner. | Simplified partner management with unified connections. |
| Innovation and Updates | Slower to adopt new technologies. | Rapid adoption of new technologies and frequent updates. |
| Support | Traditional support with potential delays. | Real-time support and extensive online resources. |

Why Choose Modern EDI Tools Over VANs? Cost Efficiency: Modern EDI tools can be more cost-effective, especially for small to medium-sized businesses, as they often have lower upfront costs and flexible pricing models. Ease of Use: Modern tools offer user-friendly interfaces, making it easier for non-technical users to manage EDI processes without extensive IT support. Integration: Modern EDI solutions seamlessly integrate with other business applications (e.g., ERP, CRM) through APIs, enhancing overall business process efficiency. Scalability: Cloud-based EDI solutions can easily scale to accommodate growing business needs, whereas traditional EDI VANs may require significant investment to scale.
Security and Compliance: Both EDI VANs and modern EDI tools offer robust security features, but it is essential to ensure that the chosen solution meets specific industry compliance requirements. Futureproofing: Modern EDI tools are better equipped to adopt new technologies and standards, ensuring long-term viability and competitiveness. How LIKE.TG Improves EDI Management LIKE.TG, a leading provider of no-code data integration solutions, offers an end-to-end automation solution for transferring and translating EDI messages. It enables seamless connectivity with all EDI partners via the cloud or internal systems and supports various industry standards like X12, EDIFACT, and HL7. Key highlights of the solution include: Seamless Connectivity: Connects with all EDI partners across various industry groups, ensuring secure data exchange and integration. Quick Onboarding: Simplifies the onboarding of trading partners, internal systems, and cloud applications, ensuring reliable connectivity and integration. Cost Reduction: Reduces ownership costs by eliminating the need for dedicated EDI experts and Value-Added Networks (VANs). Versatile Communication Protocols: Supports multiple protocols like AS2, FTP, SFTP, and APIs, enabling real-time file ingestion from trading partners. Scalability and Flexibility: Offers scalable and flexible deployment options, supporting both on-premises and cloud environments. Automated Workflows: Provides automated processes for loading, validating, and transforming EDI transactions, keeping organizations aligned with partner requirements. Final Words VANs have historically been essential for secure and efficient EDI communications. While traditional VANs offer numerous benefits, modern EDI tools provide greater flexibility, cost-efficiency, and advanced features. LIKE.TG’s EDIConnect provides a comprehensive, no-code platform that simplifies EDI management, automates workflows, and ensures seamless integration with trading partners.
Ready to optimize EDI operations? Request a personalized demo of LIKE.TG EDIConnect today and discover how it can revolutionize and streamline your EDI management.
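To make the VAN message flow described in this article more concrete (validation, routing, acknowledgment), here is a minimal Python sketch. It is an illustration under stated assumptions, not how any particular VAN or product works: the sample purchase order is a heavily simplified X12-style 850, the `Ack` object is a plain stand-in for a real acknowledgment transaction set, and the names `route`, `validate_850`, and the `mailboxes` dictionary are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field

SEGMENT_TERMINATOR = "~"
ELEMENT_SEPARATOR = "*"

# Simplified sample purchase order (assumed data, for illustration only).
SAMPLE_850 = SEGMENT_TERMINATOR.join([
    "ISA*00*          *00*          *ZZ*BUYERCO        *ZZ*SUPPLIERCO     "
    "*240101*1200*U*00401*000000001*0*P*>",
    "GS*PO*BUYERCO*SUPPLIERCO*20240101*1200*1*X*004010",
    "ST*850*0001",
    "BEG*00*SA*PO-1001**20240101",
    "PO1*1*100*EA*9.99**VP*WIDGET-7",
    "SE*4*0001",
    "GE*1*1",
    "IEA*1*000000001",
]) + SEGMENT_TERMINATOR


@dataclass
class Ack:
    """Stand-in for an acknowledgment; not a real 999 transaction set."""
    accepted: bool
    errors: list[str] = field(default_factory=list)


def parse_segments(raw: str) -> list[list[str]]:
    """Split a raw message into segments, then each segment into elements."""
    return [
        seg.strip().split(ELEMENT_SEPARATOR)
        for seg in raw.split(SEGMENT_TERMINATOR)
        if seg.strip()
    ]


def validate_850(segments: list[list[str]]) -> list[str]:
    """Run a few structural checks; an empty list means they all passed."""
    errors = []
    ids = [seg[0] for seg in segments]
    st = next((seg for seg in segments if seg[0] == "ST"), None)
    if st is None or len(st) < 2 or st[1] != "850":
        errors.append("missing ST segment for transaction set 850")
    if "BEG" not in ids:
        errors.append("missing BEG segment")
    if "PO1" not in ids:
        errors.append("no PO1 line items")
    if "SE" not in ids:
        errors.append("missing SE trailer")
    return errors


def route(raw: str, mailboxes: dict[str, list[str]]) -> Ack:
    """Validate a message, deliver it to the receiver's mailbox, and acknowledge."""
    segments = parse_segments(raw)
    errors = validate_850(segments)
    if errors:
        return Ack(False, errors)
    isa = next((seg for seg in segments if seg[0] == "ISA"), None)
    # The receiver ID is the eighth element of the ISA header (ISA08).
    receiver = isa[8].strip() if isa and len(isa) > 8 else ""
    if receiver not in mailboxes:
        return Ack(False, [f"unknown receiver: {receiver}"])
    mailboxes[receiver].append(raw)
    return Ack(True)
```

Calling `route(SAMPLE_850, {"SUPPLIERCO": []})` delivers the message and returns an accepted `Ack`, while a message without line items comes back rejected with the reason attached, which mirrors the error-handling step of the flow.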

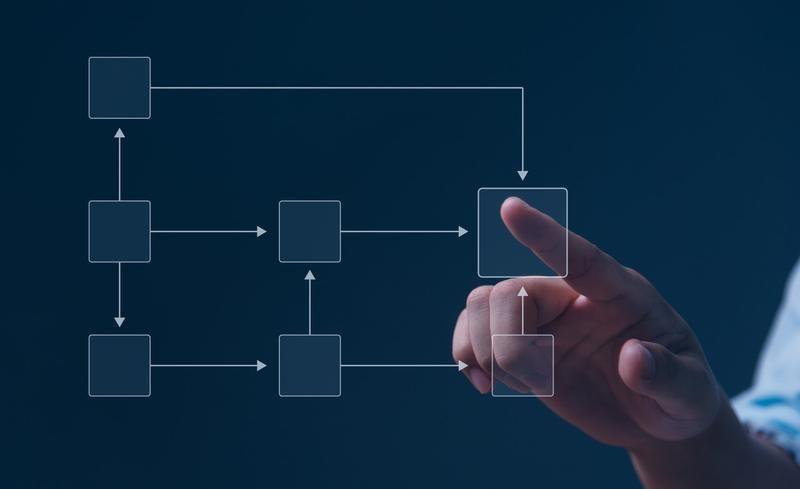

What is Data Architecture? A Look at Importance, Types, & Components

What is Data Architecture? Data architecture is a structured framework for an organization’s data assets that outlines how data flows through its IT systems. It provides a foundation for managing data, detailing how it is collected, integrated, transformed, stored, and distributed across various platforms. It also establishes standards and guidelines for data handling, creating a reliable and scalable environment that supports data-driven activities. Why is Data Architecture Important? Data architecture is important because designing a structured framework helps avoid data silos and inefficiencies, enabling smooth data flow across various systems and departments. This improved data management results in better operational efficiency for organizations, as teams have timely access to accurate data for daily activities and long-term planning. An effective data architecture supports modern tools and platforms, from database management systems to business intelligence and AI applications. It creates a scalable environment that can handle growing data volumes, making it easier to implement and integrate new technologies. Moreover, a well-designed data architecture enhances data security and compliance by defining clear protocols for data governance. Here are some business benefits that make data architecture an essential part of a data strategy: Better data management Data architecture establishes a clear framework for handling data, ensuring it’s organized, accurate, and consistent. This structured approach reduces errors and duplicates, making data easier to maintain and access. Efficient data management improves operational performance and cuts down on costs related to data handling. Easier data integration A unified structure and common standards within a data architecture environment simplify data integration. This consistency makes it easy to combine data from different sources into a single, usable format.
This seamless integration allows businesses to quickly adapt to new data sources and technologies, enhancing flexibility and innovation. Supports decision-making A robust data framework ensures that accurate and timely information is available for decision-making. It helps create reliable data pipelines and storage solutions, delivering insights when needed. With dependable data, businesses can quickly respond to changes, find new opportunities, and make insightful decisions. Types of data architectures Data architectures can be broadly categorized into two main types: centralized and distributed architectures. Each type offers distinct patterns and benefits depending on the organization’s data strategy and requirements. Centralized data architectures Centralized data architectures focus on organizing data storage in a single repository, providing a unified view of business data across various functions. This approach simplifies data management and access, making it easier to maintain consistency and control. These data architectures include: Data Warehouse: A data warehouse is a central repository that consolidates data from multiple sources into a single, structured schema. This design allows quick access and analysis, making it ideal for BI and reporting. It organizes data for efficient querying and supports large-scale analytics. Data warehouse architecture defines the structure and design of a centralized repository for storing and analyzing data from various sources. It includes data modeling, ETL processes, and storage mechanisms tailored to support business intelligence and decision-making. Data Mart: Data marts are specialized segments of data warehouses tailored for specific business lines or functions, such as sales or finance. They provide focused data views that enable quicker access and targeted analysis, improving decision-making for specific departments without the need to query the entire warehouse. 
Data Lake: A data lake stores vast amounts of raw data in its native format, accommodating various data types and structures. Unlike data warehouses, data lakes maintain an undefined structure, allowing for flexible data ingestion and storage. This setup supports diverse analytics needs, including big data processing and machine learning. Distributed Data Architectures Distributed data architectures manage data across multiple platforms and processes, creating a unified view. They also provide the flexibility and domain-specific advantages of different systems. This approach enhances scalability, interoperability, and sharing capabilities. Some common distributed data architectures include: Data Mesh: A data mesh is an architecture in which data ownership and management are decentralized to individual business domains or teams. This model empowers each domain to control and govern its data, ensuring it meets specific business needs and quality standards. Data Fabric: Data fabric uses intelligent and automated algorithms to integrate and unify disparate data across systems. It provides a seamless access layer that enhances integration across the organization. This architecture adapts as the organization grows, offering scalable and efficient data connectivity. Data Cloud: A data cloud is a cloud-based infrastructure that enables companies to store, manage, and analyze data across multiple cloud environments and services. It uses scalable cloud resources to handle diverse data workloads, from storage and processing to analytics and ML. Each type of data architecture—centralized or distributed—has unique strengths and use cases. The choice will depend on the organization’s specific needs, data strategy, and the complexity of its data assets and infrastructure. Data Architecture vs. Data Modeling vs. 
Information Architecture Data Architecture Data Architecture is the foundational design that specifies how an organization structures, stores, accesses, and manages its data. It involves decisions on data storage technologies—like databases or data lakes—integration to gather data from various sources and processing for data transformation and enrichment. Data architecture also includes governance policies for data security, privacy, and compliance to ensure data integrity. Scalability considerations are essential to accommodate growing data volumes and changing business needs. Data Modeling Data modeling is a technique for creating detailed representations of an organization’s data requirements and relationships. It ensures data is structured to support efficient storage, retrieval, and analysis, aligning with business objectives and user needs. Information Architecture Information architecture is an approach that focuses on organizing and structuring information within systems to optimize usability and accessibility. It involves creating a logical framework to help users find and understand information quickly and easily through data hierarchies and consistent categorization methods. Key Components of Data Architecture These key components of data architecture make the fundamental framework that organizations rely on to manage and utilize their data effectively: Data Models Data architecture begins with data models, which represent how data is structured and organized within an organization. These models include: Conceptual Data Model: Defines high-level entities and relationships between them. Logical Data Model: Translates conceptual models into more detailed structures that show data attributes and interdependencies. Physical Data Model: Specifies the actual implementation of data structures in databases or data warehouses, including tables, columns, and indexes. 
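The three model levels listed above can be illustrated with a small Python sketch. It is only an example under assumptions: the `Customer` and `Order` entities, the table and index names, and the choice of SQLite are all hypothetical and not taken from the article. The dataclasses stand for the logical model (attributes and interdependencies), and the DDL stands for the physical model (tables, columns, and indexes).

```python
import sqlite3
from dataclasses import dataclass

# Conceptual model (assumed): a Customer places Orders.


# Logical model: entities with attributes and their relationship.
@dataclass
class Customer:
    customer_id: int
    name: str


@dataclass
class Order:
    order_id: int
    customer_id: int  # refers to Customer.customer_id
    total_cents: int


# Physical model: concrete tables, columns, and an index in SQLite.
PHYSICAL_DDL = """
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE customer_order (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id),
    total_cents INTEGER NOT NULL
);
CREATE INDEX idx_order_customer ON customer_order(customer_id);
"""


def build_database() -> sqlite3.Connection:
    """Create an in-memory database that implements the physical model."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(PHYSICAL_DDL)
    return conn


def save(conn: sqlite3.Connection, customer: Customer, orders: list) -> None:
    """Persist one customer and that customer's orders."""
    conn.execute(
        "INSERT INTO customer VALUES (?, ?)",
        (customer.customer_id, customer.name),
    )
    conn.executemany(
        "INSERT INTO customer_order VALUES (?, ?, ?)",
        [(o.order_id, o.customer_id, o.total_cents) for o in orders],
    )
    conn.commit()
```

Keeping the logical entities separate from the DDL means the physical layer can change (for example, a different database or extra indexes) without touching the definitions that the rest of the code relies on.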
Data Storage Data architecture includes decisions on where and how data is stored to ensure efficient access and management. The storage solution is chosen based on the organization’s data type, usage patterns, and analytical requirements. Some popular data storage options are databases, data lakes, and data warehouses. Data Integration and ETL Data integration processes are critical for consolidating data from disparate sources and transforming it into formats suitable for analysis and reporting. ETL processes streamline these operations, ensuring data flows seamlessly across the organization. It involves three stages: Extract: Retrieving data from various sources, such as databases, applications, and files. Transform: Converting and cleaning data to ensure consistency and quality, often through data enrichment, normalization, and aggregation. Load: Loading transformed data into target systems like data warehouses or data lakes for storage and analysis. Data Governance Data governance helps establish policies, procedures, and standards for managing data assets throughout their lifecycle. Implementing robust governance frameworks allows organizations to mitigate risks, optimize data usage, and enhance trust in data-driven decision-making. Some key attributes of data governance are: Data Quality: Ensures data accuracy, completeness, consistency, and relevance through validation and cleansing processes. Data Lifecycle Management: Defines how data is created, stored, used, and retained to maintain data integrity and regulatory compliance. Metadata Management: Maintains descriptive information about data assets to ensure understanding, discovery, and governance. Data Security Implements measures to protect data from unauthorized access, manipulation, and breaches. Robust security helps companies mitigate risks, comply with regulatory requirements, and maintain the trust and confidentiality of their data assets. 
Controlled Access: Restricts access to data based on roles and authentication mechanisms. Encryption: Secures data in transit and at rest using encryption algorithms to prevent unauthorized interception or theft. Auditing and Monitoring: Tracks data access and usage activities to detect and respond to security breaches or policy violations. These components establish a structured approach to handling data, enabling organizations to gain actionable insights and make informed decisions. How to Design Good Data Architecture Designing good data architecture is crucial because it lays the foundation for how an organization manages and uses its data. Organizations must create a robust framework that supports existing operations and leaves room for innovation and scalability for future growth. Here are some key factors to keep in mind: Understanding the data needs When designing good data architecture, understanding data needs is foundational. Organizations must thoroughly assess their data requirements, including volume, variety, and velocity, to ensure the architecture effectively supports operational and analytical insights. Creating data standards Next, establishing data standards is crucial for coherence across the organization. This step involves preparing clear guidelines on naming conventions, data formats, and documentation practices. It streamlines data integration and analysis processes, minimizing errors and enhancing overall data quality. Choosing the right storage and tools Choosing suitable storage solutions and tools is a strategic decision. Organizations should evaluate options like relational databases for structured data, data lakes for scalability and flexibility, and data warehouses for analytical capabilities. This choice should align with scalability, performance needs, and compatibility with existing IT infrastructure.
Ensuring data security and compliance Data security and compliance are also critical in designing effective data architecture. Organizations must implement stringent measures to safeguard sensitive information and maintain regulatory compliance, such as GDPR or HIPAA. This step includes employing encryption techniques to protect data, implementing strong access controls, and conducting regular audits. Use cases Aligning with specific use cases is essential for effective data architecture. Whether supporting real-time analytics, historical data analysis, or machine learning applications, an adaptable architecture meets diverse business needs and enables informed decision-making. Best Practices for Data Architecture Here are five best practices for data architecture: Collaborate across teams: Collaboration between IT, business stakeholders, and data scientists helps ensure that data architecture meets technical and business requirements, promoting a unified approach to data management. A no-code solution allows different stakeholders to be involved in this process, regardless of their technical proficiency. Focus on data accessibility: Organizations must design architecture that prioritizes easy access to data for users across different departments and functions. This step would require implementing intuitive UI and user-friendly solutions that enable easy navigation and retrieval of data across the organization. Implement Data quality monitoring: Continuous monitoring and validation processes help maintain high data quality standards, ensuring that data remains accurate, reliable, and valuable for analytics and reporting. Adopt agile methodologies: Applying agile principles to data architecture projects allows for iterative development, quick adjustments to changing business needs, and delivery of valuable insights to stakeholders. 
A unified, no-code solution is ideal for this approach as it eliminates the complex coding requirements that can lead to bottlenecks and delays. Embrace data governance: Organizations must establish clear roles, responsibilities, and accountability for data governance within the organization. It promotes transparency and trust in data handling practices. Conclusion A solid data architecture isn’t just a good idea—it’s essential. It works as a well-organized toolbox, helping organizations work faster and more efficiently. Without it, managing data becomes complex, and decision-making suffers. Investing in building a smart data architecture allows organizations to streamline operations and work toward innovation and growth.
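The Extract, Transform, and Load stages described in the components section can be sketched in a few lines of Python. This is a minimal illustration under assumptions, not a production pipeline: the CSV sample, the `sales` table, and the cleaning rules (trim and normalize case, skip rows with an unparseable amount, drop duplicates) are all made up for the example.

```python
import csv
import io
import sqlite3

# Assumed raw input with typical quality problems:
# stray whitespace, inconsistent case, a duplicate, and a bad number.
RAW_CSV = """customer,amount,country
 alice ,10.50,us
BOB,3,US
alice,10.50,us
carol,not-a-number,de
"""


def extract(text: str) -> list:
    """Extract: read rows from a CSV source into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list) -> list:
    """Transform: normalize values, skip invalid rows, remove duplicates."""
    cleaned = []
    seen = set()
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # quality rule: skip rows whose amount cannot be parsed
        record = (
            row["customer"].strip().lower(),
            amount,
            row["country"].strip().upper(),
        )
        if record in seen:
            continue  # quality rule: keep only the first copy of a duplicate
        seen.add(record)
        cleaned.append(record)
    return cleaned


def load(conn: sqlite3.Connection, records: list) -> int:
    """Load: write cleaned records to the target table and return its row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (customer TEXT, amount REAL, country TEXT)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", records)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0]
```

Running `load(sqlite3.connect(":memory:"), transform(extract(RAW_CSV)))` stores two rows: the duplicate `alice` entry and the row with the invalid amount are filtered out during the transform stage, which is also where a data quality monitoring rule would naturally live.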

Legacy System: Definition, Challenges, Types & Modernization

Over 66% of organizations still rely on legacy applications for their core operations, and more than 60% use them for customer-facing functions. This widespread dependence on outdated technology highlights the significant role legacy systems play in modern business environments. Despite their critical functions, these systems also lead to increased maintenance costs, security vulnerabilities, and limited scalability. This blog will discuss why organizations continue to use legacy systems, the risks involved, and practical strategies for transitioning to modern alternatives. What is a Legacy System? A legacy system refers to an outdated computer system, software, or technology still in use within an organization despite the availability of newer alternatives. These systems are typically built on outdated hardware or software platforms. They may lack the modern features and capabilities that newer systems offer. Legacy systems are often characterized by age, complexity, and reliance on outdated programming languages or technologies. Types and Examples of Legacy Systems Legacy systems can come in various forms and can be found across different industries. Some common types of legacy systems include: Mainframe Systems Description: Large, powerful computers used for critical applications, bulk data processing, and enterprise resource planning. Example: IBM zSeries mainframes are often found in financial institutions and large enterprises. Custom-Built Systems Description: Systems explicitly developed for an organization’s unique needs. These often lack proper documentation and require specialized knowledge to maintain and update. Example: An inventory management system developed in-house for a manufacturing company. Proprietary Software Description: Software applications or platforms developed by a particular vendor that may have become obsolete or unsupported over time. Example: An older version of Microsoft Dynamics CRM that Microsoft no longer supports.
COBOL-Based Applications Description: Applications written in COBOL (Common Business-Oriented Language), often used in banking, insurance, and government sectors. Example: Core banking systems that handle transactions, account management, and customer data. Enterprise Resource Planning (ERP) Systems Description: Integrated management of main business processes, often in real-time, mediated by software and technology. Example: SAP R/3, which many companies have used for decades to manage business operations. Customer Relationship Management (CRM) Systems Description: Systems for managing a company’s interactions with current and future customers. Example: Siebel CRM, used by many organizations before the advent of cloud-based CRM solutions. Supply Chain Management (SCM) Systems Description: Systems used to manage the flow of goods, data, and finances related to a product or service from the procurement of raw materials to delivery. Example: Older versions of Oracle SCM that have been in use for many years. Healthcare Information Systems Description: Systems used to manage patient data, treatment plans, and other healthcare processes. Example: MEDITECH MAGIC, used by many hospitals and healthcare facilities. What Kind of Organizations Use Legacy Systems? A wide range of organizations across industries still use legacy systems. Some examples include: Financial institutions: Banks and insurance companies often rely on legacy systems to manage their core operations, such as transaction and loan processing. Government agencies: Government bodies at various levels also use legacy systems to handle critical functions such as taxation, social security, and administrative processes. Healthcare providers: Hospitals, clinics, and healthcare organizations often have legacy systems for patient records, billing, and other healthcare management processes. 
Manufacturing companies: Many manufacturing firms continue to use legacy systems to control their production lines, monitor inventory, and manage supply chain operations. Educational institutions: Schools, colleges, and universities also use legacy systems for student information management, course registration, and academic record-keeping. These systems, sometimes decades old, have been customized over the years to meet the specific needs of the educational institution. Challenges and Risks Associated with Legacy Systems While legacy systems provide continuity for organizations, they also present numerous challenges and risks: Maintenance and Support The U.S. Federal Government spends over $100 billion annually on IT investments. Over 80% of these expenses are dedicated to operations and maintenance, primarily for legacy systems. These outdated systems often have high maintenance costs due to the skills and specialized knowledge required to keep them running. As these systems age, finding experts to maintain and troubleshoot them becomes increasingly difficult and expensive. Additionally, vendor support for legacy systems diminishes over time or ceases entirely, making it challenging to obtain necessary patches, updates, or technical assistance. Integration Issues Integrating legacy systems with modern software and hardware poses significant challenges. These older systems may not be compatible with newer technologies, leading to inefficiencies and increased complexity in IT environments. Legacy systems can also create data silos, which reduces information sharing and collaboration across different departments within an organization, thus impacting overall operational efficiency. Security Vulnerabilities One of the most critical risks associated with legacy systems is their vulnerability to security threats. These systems often no longer receive security updates, exposing them to cyberattacks.
Additionally, legacy systems may not support modern security protocols and practices, increasing the risk of data breaches and other incidents that can have severe consequences for an organization. Performance and Scalability Over the past five years, over 58% of website visits have come from mobile devices. However, many older systems still lack proper mobile optimization. Legacy systems frequently suffer from decreased performance as they struggle to handle current workloads efficiently. This drawback often leads to slow load times, poor user experiences, and increased bounce rates. Furthermore, scaling legacy systems to meet growing business demands can be difficult and costly, limiting an organization’s ability to expand and adapt to market changes. Compliance and Regulatory Risks About 42% of organizations view legacy IT as a significant hurdle in compliance with modern regulations, such as GDPR (General Data Protection Regulation) and CCPA (California Consumer Privacy Act). Older systems may not comply with current regulatory requirements, which can result in legal and financial penalties for organizations. Ensuring compliance with regulations becomes more challenging with outdated systems, and conducting audits can be more complicated and time-consuming. This non-compliance risk is significant, especially in highly regulated industries. Operational Risks The reliability of legacy systems is often a concern, as they are more prone to failures and downtime, which can disrupt business operations. System failures can severely impact business continuity, especially if there are no adequate disaster recovery plans. This unreliability can lead to operational inefficiencies and the loss of business opportunities. Innovation Stagnation Relying on outdated technology can hinder an organization’s ability to adopt new technologies and processes, limiting innovation and competitive advantage.
Employees working with legacy systems may experience frustration, affecting morale and productivity. This stagnation can prevent an organization from keeping up with industry advancements and customer expectations. Data Management Legacy systems might not support modern data backup and recovery solutions, increasing the risk of data loss. Ensuring the accuracy and integrity of data can be more difficult with older systems that lack robust data management features. This risk can lead to potential data integrity issues, affecting decision-making and operational efficiency. Transition Challenges Migrating from a legacy system to a modern solution is often complex and risky, requiring significant time and resources. The transition process involves various challenges, including data migration, system integration, and change management. Additionally, the knowledge transfer from older employees familiar with the legacy system to newer staff can be challenging, further complicating the transition. Why Are Legacy Systems Still in Use Today? Despite their age and limitations, legacy systems are crucial in many organizations. They often house vast amounts of critical data and business logic accumulated over years of operation. Legacy systems continue to be utilized for many reasons: Cost of replacement: Replacing a legacy system can be complex and expensive. It may involve migrating immense amounts of data, retraining staff, and ensuring compatibility with other systems. Business-critical operations: Legacy systems often handle essential functions within an organization. The risks of interrupting or replacing them can be too high to justify the switch. Interdependencies: Legacy systems may be tightly integrated with other systems, making them difficult to replace without disrupting the entire ecosystem. How to Modernize Legacy Systems Modernizing legacy systems is critical for many organizations to stay competitive and responsive to dynamic market conditions.
While often reliable, legacy systems can be costly to maintain, complex to scale, and incompatible with modern technologies. These outdated systems can hinder innovation and agility, making it challenging to implement new features, integrate with contemporary applications, or leverage advanced technologies such as analytics, cloud computing, and artificial intelligence. Addressing these challenges through modernization enhances operational efficiency and improves security, user experience, and overall business performance. Here are several strategies to modernize legacy systems effectively:

Replatforming: Move the application to a new underlying platform (e.g., from on-premises servers to a cloud platform) without changing its core functionality.
Refactoring: Rewrite parts of the application to improve its structure and performance without changing external behavior.
Rearchitecting: Redesign the application architecture to leverage modern frameworks, patterns, and platforms (e.g., move from a monolithic architecture to microservices).
Encapsulation: Expose the legacy system's functionality as services (APIs) to integrate with new applications.
Data Migration: Migrate data from legacy software to modern databases or data warehouses and integrate with new systems.

Modernize Your Legacy System With LIKE.TG

Legacy systems, while crucial, can limit growth and innovation. Modernizing these systems is essential for improved business performance. Legacy system modernization is a complex initiative that requires a strategic approach, the right tools, and expertise to ensure a successful transition. This is where LIKE.TG can help. LIKE.TG is a leading data integration and management solutions provider that empowers organizations to modernize and transform their legacy systems. With LIKE.TG's data integration and API management platform, organizations can migrate, replatform, encapsulate, or replace legacy systems while minimizing disruption and maximizing return on investment.
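To make the encapsulation strategy concrete, here is a minimal sketch in plain Python, not tied to any particular product. It assumes a hypothetical legacy routine, `legacy_lookup`, that returns fixed-width text records (4-character id, 20-character name, 9-digit balance in cents), and wraps it in a small WSGI application that serves the same data as JSON. The record layout, function names, and URL path are illustrative assumptions only.

```python
import json

# Hypothetical sample data standing in for the legacy system's storage.
# Assumed layout: id (4 chars) + name (20 chars, space padded) + balance in cents (9 digits).
_LEGACY_RECORDS = {
    "0001": "0001" + "JANE DOE".ljust(20) + "000012345",
    "0002": "0002" + "JOHN SMITH".ljust(20) + "000000900",
}


def legacy_lookup(customer_id):
    """Stand-in for the legacy call: returns a fixed-width record, or blanks if unknown."""
    return _LEGACY_RECORDS.get(customer_id, " " * 33)


def parse_record(record):
    """Translate a fixed-width legacy record into a dict; None for a blank record."""
    if not record.strip():
        return None
    return {
        "id": record[0:4],
        "name": record[4:24].strip(),
        "balance_cents": int(record[24:33]),
    }


def app(environ, start_response):
    """WSGI application exposing GET /customers/<id> as JSON."""
    parts = environ.get("PATH_INFO", "").strip("/").split("/")
    customer = None
    if (
        environ.get("REQUEST_METHOD") == "GET"
        and len(parts) == 2
        and parts[0] == "customers"
    ):
        customer = parse_record(legacy_lookup(parts[1]))

    status = "200 OK" if customer else "404 Not Found"
    body = json.dumps(customer or {"error": "not found"}).encode("utf-8")
    start_response(
        status,
        [("Content-Type", "application/json"), ("Content-Length", str(len(body)))],
    )
    return [body]
```

For local experimentation, `app` can be served with the standard library's `wsgiref.simple_server.make_server("127.0.0.1", 8000, app)`. The point of the design is that new applications only see the JSON contract, so the legacy record format can later be replaced without changing callers.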
LIKE.TG supports various file formats and protocols, enabling smooth data flow and integration with databases like Oracle and cloud platforms such as AWS S3. Its ETL/ELT capabilities help ensure accurate and standardized data migration. Moreover, LIKE.TG's intuitive API management makes it easy to build, test, and deploy custom APIs, which is essential for integrating legacy systems with modern applications. With LIKE.TG, organizations can confidently transition from legacy systems to modern infrastructures, ensuring their data is reliable, accessible, and ready to meet current and future business needs. Sign up for a personalized demo today!

The 12 Best API Monitoring Tools to Consider In 2024